I’m adding a second server to my app. What now?

Adding a second server to your app can be a great way to improve your app's performance and/or increase its reliability. However, there are a few things you need to keep in mind when adding a second server.

In this article, we'll discuss the key things you need to consider when adding an additional server to your app. We’ll use a Laravel application hosted on a server provisioned by Laravel Forge as the example, but the concepts apply to any kind of application, in PHP or any other language.

Current infrastructure

First, to make sure we are speaking the same language, this is the outline of the current infrastructure. This app is currently running on a server created by Laravel Forge and running on AWS.

Let’s Encrypt for the SSL certificate;

Redis (installed on the machine) for sessions, caching and as the queue driver for storing and processing background jobs;

MySQL (installed on the machine) as the database;

Local folder for saving user uploaded content;

Laravel Scheduler, triggered every minute by the server’s cron;

Deployments are manually triggered by clicking Laravel Forge’s “Deploy now” button.

1. Load balancer

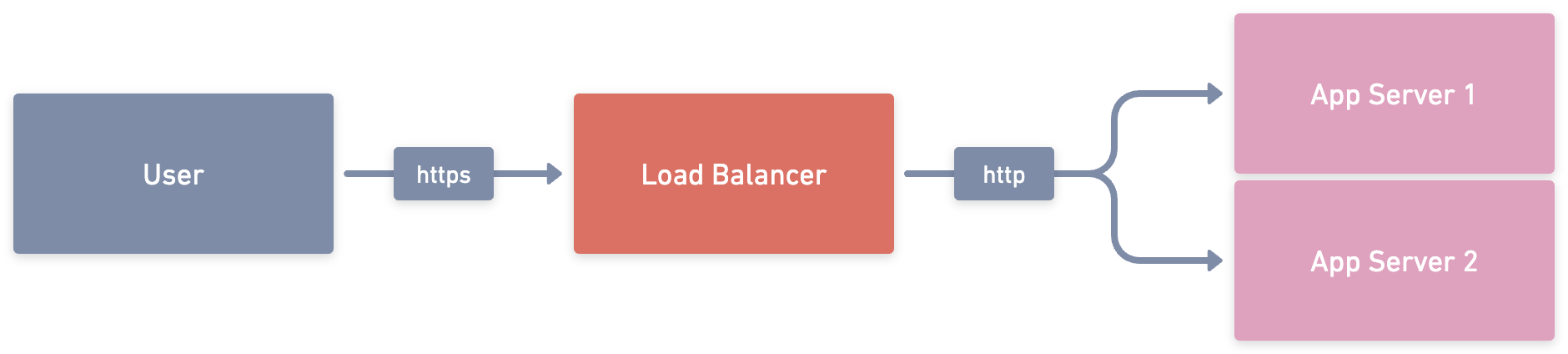

The first thing you will need is a load balancer. This will be the entrypoint of your application, meaning you will point your domain DNS to the load balancer instead of the server directly. The job of a load balancer is, as you guessed, to balance the incoming requests between all the healthy and registered servers.

From now on, every time we mention “App Server”, this will be referring to a single server running our Laravel application.

One of the nice features of a load balancer is the health checks, which serve the purpose of making sure that all connected servers are healthy. If one of the servers fails for some reason, some unscheduled maintenance for example, the load balancer will stop routing requests to that server until the server is up, running, and healthy again.
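As a minimal sketch, a health check endpoint that the load balancer could poll might look like the route below. The `/health` path and the choice of what to check are assumptions made for illustration; the only real requirement is that the endpoint returns a non-2xx status when the server cannot serve requests properly.

```php
<?php
// routes/web.php (hypothetical health check endpoint; the /health path is an assumption)

use Illuminate\Support\Facades\DB;
use Illuminate\Support\Facades\Redis;
use Illuminate\Support\Facades\Route;

Route::get('/health', function () {
    try {
        // Throws if the database connection cannot be established.
        DB::connection()->getPdo();

        // Throws if the Redis server is unreachable.
        Redis::connection()->ping();
    } catch (\Throwable $e) {
        // A 503 response tells the load balancer to treat this server as unhealthy.
        return response()->json(['status' => 'unhealthy'], 503);
    }

    return response()->json(['status' => 'ok']);
});
```

Checking the database and Redis here is a design choice: it takes a server out of rotation when its dependencies are unreachable, at the cost of a slightly heavier check on every poll.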

We recommend using an Application Load Balancer, which gives you more robust functionality down the road if you need it. Application load balancers can route traffic to specific servers based on the requested URL and can even route requests to multiple applications. For now, we will have it balance traffic evenly using the round robin method.
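To make round robin balancing with health checks concrete, here is a small, self-contained simulation in plain PHP (8.0 or later). It is only an illustration of the idea, not how a real load balancer is implemented; the `RoundRobinBalancer` class and the server names are hypothetical.

```php
<?php

declare(strict_types=1);

/**
 * Illustrative round robin selector that skips unhealthy servers.
 */
final class RoundRobinBalancer
{
    private int $cursor = 0;

    /** @param array<string, bool> $servers server name => is healthy */
    public function __construct(private array $servers)
    {
    }

    /** Records the result of a health check for one server. */
    public function markHealth(string $server, bool $healthy): void
    {
        $this->servers[$server] = $healthy;
    }

    /** Returns the next healthy server, or null when none is healthy. */
    public function next(): ?string
    {
        $names = array_keys($this->servers);
        $count = count($names);

        for ($i = 0; $i < $count; $i++) {
            $candidate = $names[($this->cursor + $i) % $count];

            if ($this->servers[$candidate]) {
                // Resume after the chosen server on the next call.
                $this->cursor = ($this->cursor + $i + 1) % $count;

                return $candidate;
            }
        }

        return null;
    }
}

$balancer = new RoundRobinBalancer(['app-server-1' => true, 'app-server-2' => true]);

$first = $balancer->next();
$second = $balancer->next();
$third = $balancer->next();

// Simulate a failed health check: app-server-1 stops receiving requests.
$balancer->markHealth('app-server-1', false);
$afterFailure = $balancer->next();
$afterFailureAgain = $balancer->next();
```

With both servers healthy, requests alternate between them; once `app-server-1` is marked unhealthy, every request goes to `app-server-2` until a later health check marks the first server healthy again.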

Since your domain will now point to the load balancer, your SSL certificate should also live on the load balancer instead of on your servers.

2. Database (MySQL), cache & queue (Redis)

Currently, there is one server running our app along with local instances of MySQL and Redis. What happens when a second server gets attached to our load balancer?

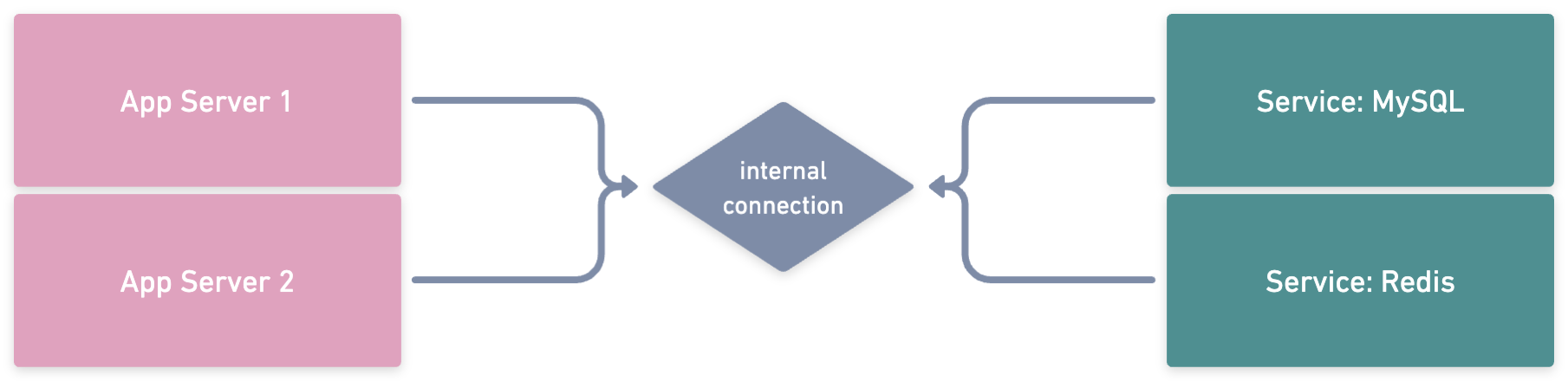

Having multiple sources of truth for our database and caching layers could generate all kinds of issues. With multiple databases, a user would be registered on one server but not on the other. With one Redis instance per server, you could be logged in to App Server 1, but when the load balancer sends your next request to App Server 2 you would have to sign in again, since your session is stored in App Server 1’s local Redis instance.

We could make App Server 2, or any future App Servers connected to our load balancer, connect to App Server 1’s services, but what happens when App Server 1 has to go down for maintenance or fails unexpectedly? One of the reasons to add a second server is to gain reliability and scalability, and this setup would leave App Server 1 as a single point of failure, so it does not solve our problem.

The ideal scenario, when we have multiple app servers, is to run services like MySQL and Redis in a separate environment. To achieve this, we can use managed services, like Amazon RDS for databases and Amazon ElastiCache for Redis, or unmanaged services, meaning we set up a separate server and run those services ourselves. Managed services are usually the better option if cost is not an issue, since you don’t have to worry about OS and software upgrades, and they usually come with a better security layer.

Let’s imagine we decided to go with managed services for our application. Our Laravel configuration would look similar to this:

```diff
-DB_HOST=localhost
+DB_HOST=app-database.a2rmat6p8bcx7.us-east-1.rds.amazonaws.com
-REDIS_HOST=localhost
+REDIS_HOST=app-redis.qexyfo.ng.0001.use2.cache.amazonaws.com
```

After everything is set up, our infrastructure would look like this when connecting our App Servers to our services.

3. User uploaded content

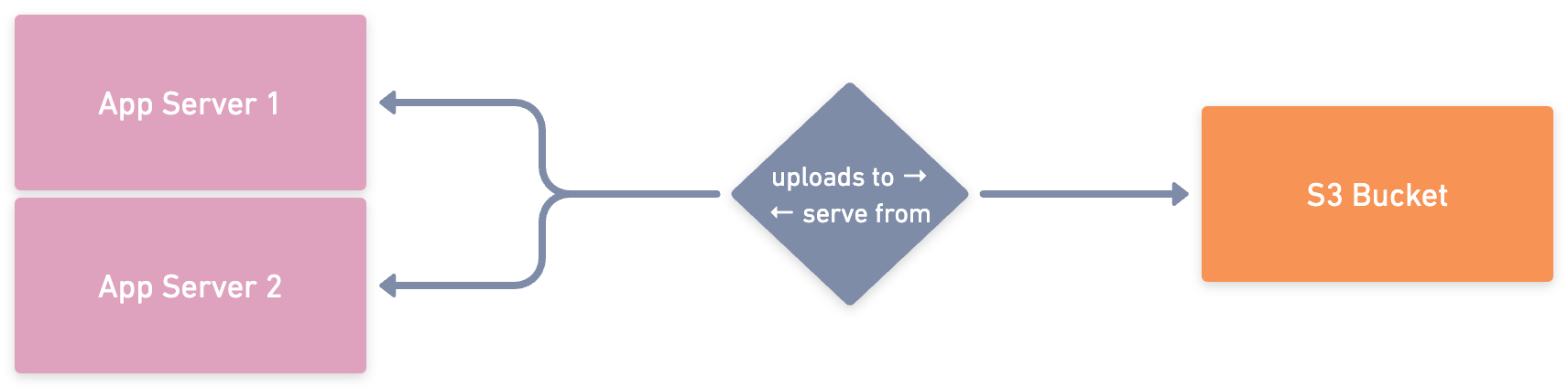

Our application allows users to upload a custom profile picture, which shows up when they are logged in. On our current infrastructure, images get saved in an internal folder in our application and also get served from there. Now that we have multiple App Servers, this would be an issue, since images uploaded to App Server 1 will not be present on App Server 2.

There are a few ways to solve this. One of them is to have a shared folder between your servers (Amazon EFS, for example). If we choose this option, we would have to configure a custom filesystem in Laravel which would point to this shared folder location on our App Servers. While a valid option, this requires some knowledge to set up the disk on the servers, and for every new server you set up, you would have to configure the shared folder again.
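For the shared folder option, the custom filesystem could be sketched as an extra disk in `config/filesystems.php`. The disk name `shared`, the `SHARED_UPLOADS_PATH` variable, and the `/mnt/efs/uploads` mount point are all assumptions for illustration; the shared folder would have to be mounted at the same path on every App Server.

```php
<?php
// config/filesystems.php (fragment; only the added disk is shown)

return [
    'disks' => [
        // Hypothetical disk backed by a shared mount such as Amazon EFS.
        // The default path is an assumption and must exist on every App Server.
        'shared' => [
            'driver' => 'local',
            'root' => env('SHARED_UPLOADS_PATH', '/mnt/efs/uploads'),
        ],
    ],
];
```

Application code would then read and write through `Storage::disk('shared')`, so nothing else needs to know where the folder is mounted.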

We usually prefer using a cloud object storage service instead, like Amazon S3 or DigitalOcean Spaces. Laravel makes it really easy to work with these services if you are using its File Storage features. In this case, you would only have to configure your filesystem disk to use S3 and upload all your previously uploaded user content to a bucket.

```diff
-FILESYSTEM_DISK=local
+FILESYSTEM_DISK=s3
 AWS_ACCESS_KEY_ID=your-key
 AWS_SECRET_ACCESS_KEY=your-secret-access-key
 AWS_DEFAULT_REGION=us-east-1
 AWS_BUCKET=your-bucket-name
```

All your user uploaded content will be stored in the same, centralized bucket. S3 supports versioning, offers multiple layers of redundancy, and any additional app servers we add to our load balancer can use the same bucket to store content.
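Moving the previously uploaded content into the bucket can be done in several ways. One possible sketch is a one-off Artisan command that copies files from the local disk to the `s3` disk. The command name `uploads:copy-to-s3` and the `avatars` directory are hypothetical, and the S3 disk assumes the Flysystem S3 adapter package is installed.

```php
<?php
// routes/console.php (hypothetical one-off command; name and directory are assumptions)

use Illuminate\Support\Facades\Artisan;
use Illuminate\Support\Facades\Storage;

Artisan::command('uploads:copy-to-s3', function () {
    $local = Storage::disk('local');
    $s3 = Storage::disk('s3');

    foreach ($local->allFiles('avatars') as $path) {
        // Skip files that were already copied so the command can be re-run safely.
        if ($s3->exists($path)) {
            continue;
        }

        // Stream the file so large uploads are not loaded fully into memory.
        $stream = $local->readStream($path);

        if (! is_resource($stream)) {
            $this->error("Could not read {$path}");
            continue;
        }

        $s3->writeStream($path, $stream);

        // Some adapters close the stream themselves, so check before closing.
        if (is_resource($stream)) {
            fclose($stream);
        }

        $this->info("Copied {$path}");
    }
});
```

Keeping the same relative paths on both disks means any paths already stored in the database remain valid after switching `FILESYSTEM_DISK` to `s3`.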

If your application grows in the future, you can set up Amazon CloudFront, which acts as a CDN layer sitting on top of your S3 bucket, serving your bucket content to your users faster and often at a lower data transfer cost than serving it directly from S3.

4. Queue workers

In step 2, we set up a centralized Redis server, which is the technology we were using to manage our application queues. This will also work for our load balanced applications, but there are a few good options to explore.

If you continue to process your queues on your app servers leveraging the centralized Redis instance, no changes need to be made. The jobs will get picked up by the server that has a worker available to process a job.

Another option is to use a service like AWS SQS, which can relieve some pressure on your Redis instance as your application grows by offloading that workload to another service.
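If you go the SQS route, the change is mostly configuration. The fragment below is modeled on the `sqs` connection found in Laravel's default `config/queue.php`; the account ID and queue name are placeholders, and the SQS driver assumes the AWS SDK for PHP is installed.

```php
<?php
// config/queue.php (fragment, modeled on Laravel's default "sqs" connection)

return [
    // Setting QUEUE_CONNECTION=sqs in .env switches the app over to SQS.
    'default' => env('QUEUE_CONNECTION', 'sqs'),

    'connections' => [
        'sqs' => [
            'driver' => 'sqs',
            'key' => env('AWS_ACCESS_KEY_ID'),
            'secret' => env('AWS_SECRET_ACCESS_KEY'),
            // Placeholder values: replace the account ID and queue name with your own.
            'prefix' => env('SQS_PREFIX', 'https://sqs.us-east-1.amazonaws.com/your-account-id'),
            'queue' => env('SQS_QUEUE', 'default'),
            'region' => env('AWS_DEFAULT_REGION', 'us-east-1'),
        ],
    ],
];
```

Your job classes and `dispatch()` calls stay the same; only the connection the workers listen on changes.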

5. Scheduled commands

When running multiple servers behind a load balancer, scheduled commands would run on each server attached to your load balancer by default, which is not optimal. Not only would running the same command multiple times be a waste of processing power, but it could also cause data integrity issues depending on what that command does.

Laravel has a built-in way to handle this scenario so that your scheduled commands only run on a single server: chain the onOneServer() method onto the scheduled command.

```php
$schedule->command('report:generate')
    ->daily()
    ->onOneServer();
```

Using this method does require the use of a centralized caching server, so Step 2 is critical to making this work.

6. Deployment

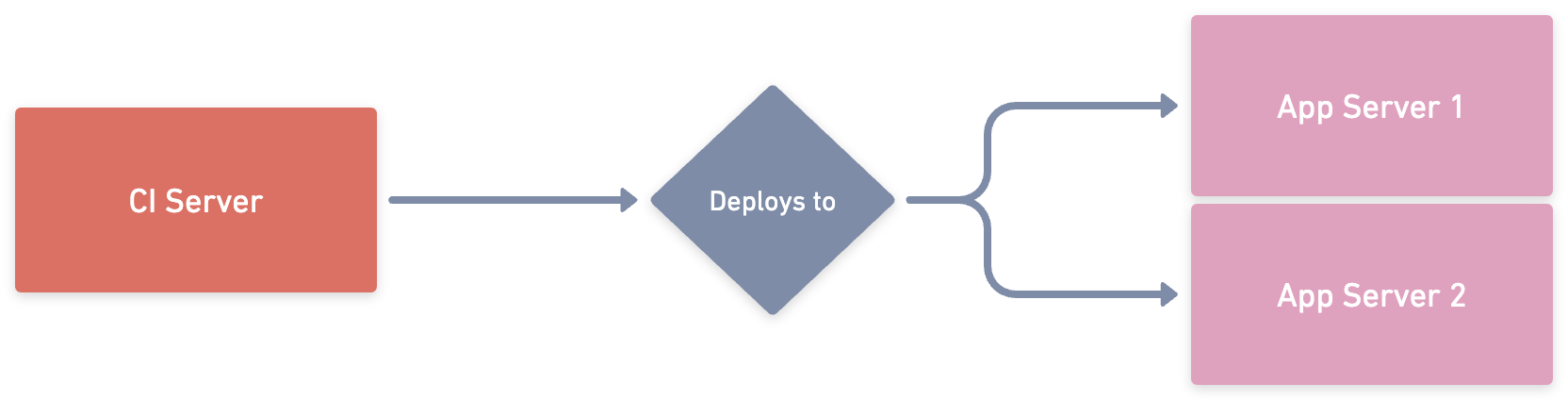

When it comes to deploying your application, you now have so many options and things to consider.

We can still deploy our applications using our previous approach, but now we have to make sure we remember to click the deploy button on both servers. If we forget, we would have our servers running different versions of the application, which could cause huge issues.

With multiple servers, it’s probably time to level up the deployment strategy. There are some very good deployment tools and services out there, like Laravel Envoyer or PHP Deployer. These types of tools and services allow you to automate the deployment process across multiple servers, so you can remove human error from the equation.

If we want to go one level deeper in our deployment process, since we now have 2 app servers, one of the great benefits is that we can temporarily remove one of the servers from the load balancer, and that server will stop receiving requests. This allows us to have zero downtime deployment, where we remove the first server from the load balancer, deploy the new code, put it back into the load balancer, remove the second one and do the same process again. Once server 2 is finished, both servers will have the new code and will be attached to the load balancer. To achieve this, we would use tools like AWS CodeDeploy, but the setup is more complex than our previous options.
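The rolling process described above can be sketched in plain PHP. This is only an outline of the ordering, not a real deployment tool: the four callables are hypothetical stand-ins for whatever your load balancer and deployment tooling actually expose.

```php
<?php

declare(strict_types=1);

/**
 * Deploys to one server at a time so at least one server keeps serving traffic.
 * The callables are hypothetical stand-ins for real load balancer and deploy calls.
 *
 * @param list<string> $servers
 */
function rollingDeploy(
    array $servers,
    callable $detach,
    callable $deploy,
    callable $isHealthy,
    callable $attach
): void {
    foreach ($servers as $server) {
        $detach($server); // stop routing requests to this server
        $deploy($server); // ship the new code while it receives no traffic

        if (! $isHealthy($server)) {
            // Leave the server detached and stop, so the rest keep running the old code.
            throw new RuntimeException("Deployment failed on {$server}; rollout halted.");
        }

        $attach($server); // put it back before touching the next server
    }
}

// Usage with stubs that only record the order of operations.
$log = [];

rollingDeploy(
    ['app-server-1', 'app-server-2'],
    function (string $s) use (&$log): void { $log[] = "detach {$s}"; },
    function (string $s) use (&$log): void { $log[] = "deploy {$s}"; },
    fn (string $s): bool => true,
    function (string $s) use (&$log): void { $log[] = "attach {$s}"; }
);
```

Halting on the first unhealthy server is a deliberate choice in this sketch: the remaining servers keep serving the previous version instead of all of them being taken down by a bad release.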

Deployment is a very important part of running our applications, so automating it with GitHub Actions or any other CI/CD service greatly improves the process. A deployment process that is simple, and that anyone on the team can trigger, really shows the maturity of the development team and the application.

7. Network & security

One additional benefit of using a load balancer is that our servers are no longer the entry point of our website. This means we can make our servers accessible only internally and/or restrict access to specific IPs (our IPs, load balancer IPs, etc). This greatly improves the security of our servers since they are not directly accessible. The same can (and should) be done for our database and cache clusters.

To achieve this, we are going to only allow traffic to port 22 from our own IPs (so we can SSH into the server) and we are going to only allow traffic to port 80 from the load balancer, so it can send requests to the server. The same rules apply for our database and cache clusters.

Final thoughts

There are a lot of things to consider when adding servers to your infrastructure. Doing so adds complexity to your infrastructure and workflows, but it also increases the reliability and scalability of your application and improves your overall security.

When considered from the beginning of the process, these recommendations are simple to implement and can have a large impact on improving your app.